Artificial Intelligence, Autonomous Drones and Legal Uncertainties

Drones represent a rapidly developing industry. Devices initially designed for military purposes have evolved into a new area with a plethora of commercial applications. One of the biggest hindrances to the commercial development of drones is uncertainty about which legal regimes apply to the multitude of issues that arise with this new technology. This is especially so for autonomous drones (ie drones operating without a pilot). This article provides an overview of some of these uncertainties. A scenario based on the fictitious but plausible event of an autonomous drone falling from the sky and injuring people on the ground is analysed from the perspectives of both German and English private law. This working scenario is used to illustrate the problem of legal uncertainty facing developers; by mapping real uncertainties that impede the development of autonomous drone technology, the article also offers multidisciplinary insights from law as well as software, electronic and computer engineering.

I. Introduction: the rapid growth of drone technology

Unmanned aerial vehicles, commonly referred to as “drones”,1 offer enormous potential for developing innovative civil applications in a wide variety of sectors.2 According to data gathered by the Commission of the European Union (EU), within twenty years the European drone sector alone is expected to employ directly more than 100,000 people and to have an economic impact exceeding €10 billion per year.3

The lack of a coherent regulatory regime was identified early on as a potential hindrance for the development of this new technology. The EU Commission, in its 2014 Communication on how to make Europe a global leader in the drone industry, said that investments would be delayed “until sufficient legal certainty on the legal framework is offered”.4

One of the key proposals outlined in the Communication was that the EU should develop common rules for all drone operations. A year later, the EU Commission adopted a new aviation strategy for Europe.5 This strategy included a plan on how to address future challenges faced by the European aviation sector and how to improve the competitiveness of the aerospace industry. The plan included a proposal for a revision of the so-called “Basic Regulation”, which brought all aircraft, including drones, under EU competence and established the European Aviation Safety Agency (EASA).6

The Basic Regulation defines unmanned aircraft as “any aircraft operating or designed to operate autonomously or to be piloted remotely without a pilot on board”.7 While it defines the concept of unmanned aircraft and provides rules for their design, production, maintenance and operation, it does not provide legal certainty concerning a multitude of legal issues, such as civil liability, privacy or administrative and criminal law – issues that are largely regulated in domestic law.8

This article will provide an overview of some of the uncertainties raised by the operation of autonomous drones, both in general and in the context of a pan-European research project on autonomous drones (the Risk-Aware Autonomous Port Inspection Drones (RAPID) project).9 The authors use this project to illustrate the legal uncertainties facing developers of autonomous drone technology, focusing in particular on civil liability. By combining insights from legal scholarship and software, electronic and computer engineering, the article provides a real-world perspective on how legal uncertainty may affect technological innovation and development. The legal analysis focuses mainly on German and English private law. These represent two of the six jurisdictions of the partners involved in the ongoing project (the others being Belgium, France, Ireland and Norway), as well as the two major legal traditions, viz. civil and common law. The authors argue that despite the establishment of common rules for the registration, certification and conduct of drone operators within the EU, the lack of legal certainty on a number of other issues still stifles drone development, undermining the EU’s ambition to become the global leader in this rapidly evolving industry. To make this argument, the article uses a fictitious but not unlikely scenario of an autonomous drone (that is to say, a drone operating without a pilot and directed on the basis of machine learning) falling from the sky and injuring people on the ground. This scenario is analysed from the perspectives of German and English private law.

The UK left the EU on 31 January 2020 and, since the end of the transition period on 31 December 2020, is no longer subject to EU law nor part of EU institutions, such as EASA. The UK will nevertheless be used as an example, since many of the problems faced by the EU remain relevant to the UK even after it regained legislative authority over civil aviation upon leaving the EU. Much of the existing EU legislation still applies in domestic UK law as “retained EU law”. Thus, while the relevant EU regulations no longer formally apply to the UK, their provisions have been made part of UK law and will remain so for the foreseeable future.10

Before detailing the working scenario, which forms the basis of this article, it is useful to provide some information about the RAPID project. Section II provides information about the project, as well as more generally about Artificial Intelligence (AI) and machine learning. Section III outlines some of the broader legal uncertainties in the operation of autonomous drones, whereas Section IV focuses on the working scenario, which is analysed from the English and German perspectives in Sections IV.1 and IV.2, respectively. The article then concludes in Section V that the level of uncertainty and fragmentation in the law on drones stifles the further rollout of the technology in new situations, such as those envisaged by the RAPID project and other autonomous uses of this new technology.

II. The Risk-Aware Autonomous Port Inspection Drones project

The RAPID project seeks to extend the capabilities of commercial drones to provide an early warning system that will detect critical deterioration in transport system infrastructure while minimising system disruption and delays to critical supply chains.11 It uses approved and commercially available drones in four use case scenarios: bridge inspection, ship emission monitoring, ship hull inspection and emergency response. Importantly, the difficulty of clearly identifying and applying the relevant rules in these scenarios is likely to be mirrored in situations explored by other operators, meaning that the lessons learnt in the RAPID project are relevant more generally.

In each of the four scenarios a single drone or swarms of autonomous drones will take off and navigate through a potentially cluttered urban environment to arrive at and return from a designated target, such as a bridge or ship. While the technology to accomplish this is within reach of the project, its deployment is impeded by legal challenges, as highlighted in this article.

The most important challenge for operating drones in Europe is legal uncertainty. The development and use of autonomous drones raise a bewildering number of legal issues, which are governed by an equally complex set of rules derived from domestic, regional and international law. Most international conventions that apply to civil aviation, for example, are limited in their ability to regulate drones effectively. One such example is the 1944 Convention on International Civil Aviation, which “provides an overarching and underpinning legal framework for international civil aviation”.12 While the terms of the 1944 Convention are broad enough to encompass drones,13 the Convention applies only to international aviation.14 Purely domestic flights, by drones or other aircraft, are therefore not regulated by this Convention.15 Additionally, the 1944 Convention is generally read to prohibit drones, in particular autonomous drones, from flying over another state’s territory without its permission.16

Several bodies are formulating proposals for new rules on drones. Among them are the International Civil Aviation Organization (ICAO), a worldwide regulator, and national and regional actors, such as EASA and the US Federal Aviation Administration (FAA).17 In addition, there are numerous other actors, such as the European Organisation for Civil Aviation Equipment (EUROCAE), the Joint Authorities for Rulemaking on Unmanned Systems (JARUS), the European Defence Agency and the European Space Agency, as well as the drone manufacturing industry and operators, which have both adopted industry standards and sought to influence the official law-making process. Despite the numerous actors involved, detailed global or even regional rules concerning autonomous drones are still a long way off. For the time being, national laws must fill the gaps that international and regional rules have left. This article explores some of the current impediments, focusing on the inspection of bridges, but identifying issues common to many proposed use cases for drones.

Before analysing this scenario, it is useful to provide some clarity on AI and machine learning, which seem somewhat misunderstood by the public.18 Thus, to better understand some of the legal uncertainties that arise with the use of autonomous drones, the next section describes some key features of AI and machine learning.

1. Artificial intelligence, machine learning and computer vision for autonomous drone systems

There are myriad technologies that may be classified under the broad spectrum of the term “AI”. Generally defined, these are systems that behave as though they are intelligent19 (ie they have the ability to acquire and apply knowledge and skills20). Autonomous drone systems must exhibit certain behaviours that can be broadly classified as “intelligent” in order to be effective. For instance, an autonomous system capable of operating in the abovementioned scenarios must be able to take decisions without the (apparent) intervention of another independent actor. A drone following a pre-planned path to a bridge might encounter an unexpected obstacle, such as an oncoming ship, in its path and “decide” to increase its altitude to avoid a collision. While this behaviour appears autonomous and by extension intelligent, these types of adaptation rules can be programmed into the set of available responses of a system when faced with a class of external stimuli, like an oncoming ship. The system must also have self-adaptive behaviour (ie the capacity to modify its own behaviour dynamically and “learn” from its environment).21 A drone flying through a cluttered urban environment might lose its satellite navigation signal and switch to relying on its internal sensors to estimate its real-world position until the signal is recovered. In that example, the navigation behaviour changes because the system has detected a variation in its environment (here, the loss of the satellite navigation signal).
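The two examples above can be sketched as a handful of pre-programmed adaptation rules. The sketch is illustrative only and assumes a drone whose state is reduced to an altitude and a navigation mode; the names `DroneState`, `NavMode` and `react`, and the 10 m climb step, are hypothetical and are not taken from the RAPID project. It shows how behaviour that looks like a “decision” is simply the selection of a response written in advance for a class of stimuli.

```python
from dataclasses import dataclass
from enum import Enum, auto


class NavMode(Enum):
    SATELLITE = auto()  # position from satellite navigation
    INERTIAL = auto()   # position estimated from on-board sensors only


@dataclass(frozen=True)
class DroneState:
    altitude_m: float
    nav_mode: NavMode = NavMode.SATELLITE


CLIMB_STEP_M = 10.0  # hypothetical value, for illustration only


def react(state: DroneState, obstacle_ahead: bool, satellite_fix: bool) -> DroneState:
    """Select pre-programmed responses for one set of sensed stimuli.

    Every response was written in advance by a designer; the drone only
    chooses among them, which is why its behaviour merely appears intelligent.
    """
    altitude = state.altitude_m
    if obstacle_ahead:
        # Rule 1: obstacle in the flight path (e.g. an oncoming ship) -> climb.
        altitude += CLIMB_STEP_M
    # Rule 2: no satellite signal -> rely on internal sensors; switch back
    # to satellite navigation once the signal is recovered.
    mode = NavMode.SATELLITE if satellite_fix else NavMode.INERTIAL
    return DroneState(altitude_m=altitude, nav_mode=mode)


state = DroneState(altitude_m=50.0)
state = react(state, obstacle_ahead=True, satellite_fix=True)    # climbs to 60.0 m
state = react(state, obstacle_ahead=False, satellite_fix=False)  # switches to INERTIAL
print(state)
```

Because every branch is written by a designer, the behaviour of this toy is fully predictable; the difficulty described in the following paragraph arises when many such rules, and learned components, interact with a complex environment.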

The key issue to be drawn from these examples is that while the behaviours appear “intelligent” through the acquisition and application of knowledge, they are in fact the result of the design, programming and deployment of the cyber-physical system. The challenge lies in the fact that when autonomy and self-adaptation capabilities are combined with an increasingly challenging environment, the number of possible emerging behaviours is so great that it is not feasible for them to be managed by current systems engineering practices.22 That is to say, the reactions of the drones become unpredictable to the human operator.
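The scale of the problem can be illustrated with a back-of-the-envelope count. Assume, purely for illustration, that the drone’s situation is summarised by 20 independent yes/no conditions (satellite fix available or not, wind above a threshold or not, and so on) and that it has 5 adaptive behaviours with 4 modes each. These figures and the helper `combined_configurations` are hypothetical; the point is only that the number of joint configurations is the product of the options, so it grows exponentially with every factor added.

```python
from math import prod


def combined_configurations(option_counts: list[int]) -> int:
    """Joint configurations of independent factors, where factor i can take
    option_counts[i] distinct values (the product of the counts)."""
    return prod(option_counts)


# Hypothetical figures, for illustration only.
environment = [2] * 20   # 20 independent yes/no conditions
behaviours = [4] * 5     # 5 adaptive behaviours, 4 modes each
total = combined_configurations(environment + behaviours)
print(f"{total:,}")      # 2**20 * 4**5 = 2**30
```

Even under these modest assumptions the result is 2^30, just over a billion configurations, before accounting for the fact that real conditions such as wind speed or distance to an obstacle are continuous rather than binary.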

A key underlying technology that enables autonomy and self-adaptation is machine learning. This is a subfield of AI that deals with developing algorithms that automatically improve the capabilities of autonomous systems.23

2. How autonomous drones learn

An overall imperative for the widespread adoption of autonomous drones is safety. This means that autonomous drones must be able to detect and avoid (D&A) any potential obstacle in their flight path, and this must happen without the intervention of any operator. Current state-of-the-art D&A capabilities support operators in their drone operations by facilitating the identification of hazards that could result in a collision. D&A capabilities rely on computer vision algorithms to “sense” the environment. To give a better understanding of how autonomous drones learn and operate, we will now describe how these algorithms are designed and deployed.

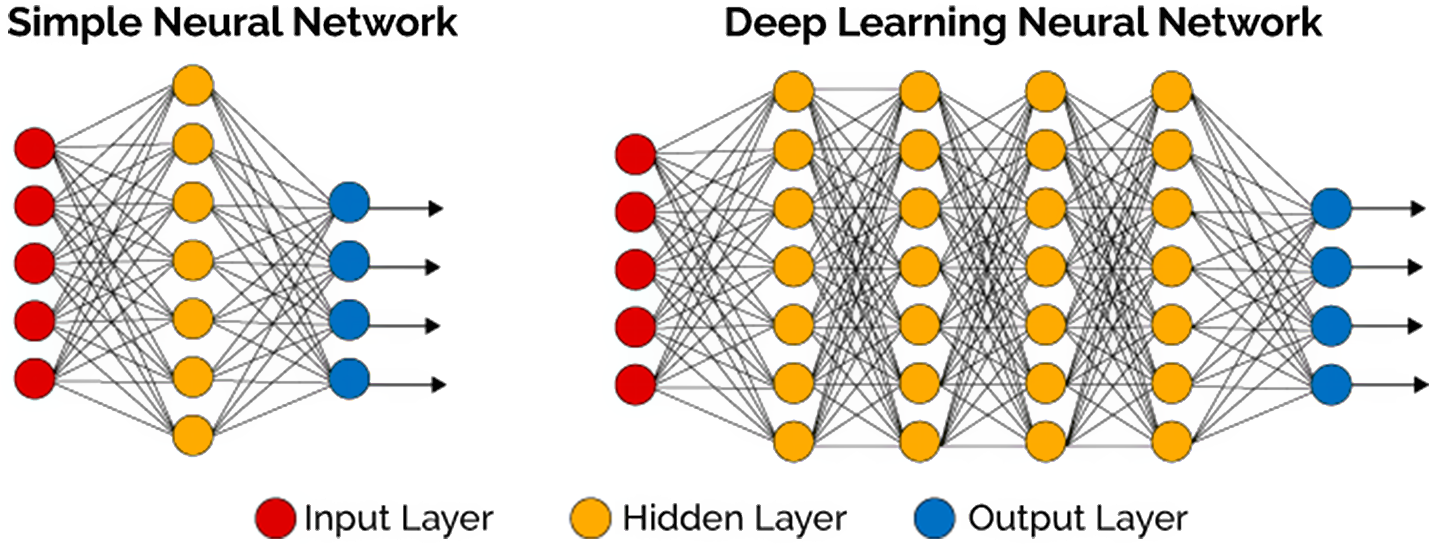

Computer vision algorithms rely on a specific type of algorithm known as artificial neural networks (ANNs) or, more recently, deep learning neural networks (DLNNs). From a design perspective, these two types of networks have the same elements (see Figure 1). First is an “input layer”, through which the “sensed” image is fed into the ANN/DLNN. For training purposes, this “sensed” image is, in principle, annotated,24 which means that another agent (typically a human) has provided a “ground truth” that describes the image. For example, an image of a bird is labelled as a bird, and this annotation is the target against which the network’s output is compared. Second, there must be an “output layer” that provides the result of the classification process. The input and output layers are linked by connections (directly or via intermediate “hidden” layers); each connection is weighted, and these weights are modified during the training process.
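A minimal sketch of these elements, written in plain Python under simplifying assumptions: a hypothetical four-pixel greyscale “image”, two hypothetical output classes (“bird” and “not bird”), and a single layer of weighted connections from input to output with no hidden layer. The weights are random, as they would be before training, so the prediction is arbitrary; it is the comparison with the annotated ground truth that training uses to adjust them. Softmax is one common way of turning output scores into class probabilities and is used here as an assumption, not as the method of any particular system.

```python
import math
import random

CLASSES = ["bird", "not bird"]  # hypothetical label set


def forward(pixels: list[float], weights: list[list[float]], biases: list[float]) -> list[float]:
    """Pass an input-layer vector through one layer of weighted connections
    to the output layer; weights[j][i] links input i to output j."""
    scores = [sum(w * x for w, x in zip(row, pixels)) + b
              for row, b in zip(weights, biases)]
    # Softmax: convert raw output scores into class probabilities summing to 1.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


rng = random.Random(0)
n_inputs = 4  # a hypothetical 2x2 greyscale "image", flattened
weights = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in CLASSES]
biases = [0.0 for _ in CLASSES]

# One annotated sample: the pixel values plus a human-provided ground truth.
sample_pixels = [0.9, 0.1, 0.8, 0.2]
ground_truth = "bird"

probs = forward(sample_pixels, weights, biases)
predicted = CLASSES[probs.index(max(probs))]
print(predicted, "vs ground truth", ground_truth, "->", predicted == ground_truth)
```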

Figure 1. A simple artificial neural network and a deep learning neural network.

DLNNs rely on modern computing resources to support multiple hidden layers, and this has enabled the recent expansion of the application domains and capabilities of computer vision and machine learning. It is generally assumed that increasing the number of hidden layers improves the classification capacity of the network.25
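A rough way to see why additional hidden layers demand more computing resources is to count the weights and biases that training must adjust. The sketch assumes fully connected layers and a hypothetical 64×64 greyscale input (4,096 values) with two hidden layers of 512 units; practical computer vision networks usually use convolutional layers, so the numbers are illustrative only.

```python
def count_parameters(layer_sizes: list[int]) -> int:
    """Weights plus biases of a fully connected network whose layers have
    the given sizes (input layer first, output layer last)."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


shallow = count_parameters([4096, 2])          # input straight to output
deep = count_parameters([4096, 512, 512, 2])   # two hidden layers
print(shallow, deep)
```

With these assumptions the shallow network has 8,194 adjustable parameters and the two-hidden-layer network 2,361,346, each of which is updated repeatedly during training.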

Training is the process by which the ANN/DLNN is stimulated with a dataset (a huge set of annotated input images) and, for each item in the dataset, the output is compared against the annotated ground truth. The weighting of the connections is adjusted at the end of each iteration, through a pre-set error-based mechanism, and the process is repeated until the network recognises objects in an image within the acceptable range of accuracy. The expected classification behaviour therefore “emerges” through several training iterations. The key to a successful deployment of an ANN/DLNN in the field is that the network is able to classify properly not only known inputs (samples it has seen in the training dataset) but also previously unseen inputs that are present in the deployment environment, such as a bird flying near the drone. This capability is known as generalisation, and its failure takes the form of under- or overfitting. A neural network model is underfitting if it fails to perform to acceptable accuracy on the training dataset. By contrast, a neural network is overfitting if it performs to acceptable accuracy on the training dataset but fails to maintain this accuracy when faced with previously unseen input.26
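The training procedure and the under/overfitting check described above can be sketched as follows. This is a simplified stand-in, not a DLNN: it assumes logistic regression (a single artificial neuron) trained by stochastic gradient descent on a synthetic, hypothetical dataset of two-dimensional points labelled 1 when x + y > 1. The weights are adjusted after each example in proportion to the error against the ground truth, training stops once training accuracy reaches a pre-set target, and accuracy on held-out data the model has not seen is then compared with training accuracy. The 0.9 target and 0.1 tolerated gap in `diagnose` are arbitrary illustrative thresholds.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def make_dataset(n: int, rng: random.Random) -> list[tuple[tuple[float, float], int]]:
    """Hypothetical annotated data: the ground truth is 1 when x + y > 1."""
    data = []
    for _ in range(n):
        point = (rng.random(), rng.random())
        data.append((point, 1 if point[0] + point[1] > 1.0 else 0))
    return data


def predict(w: list[float], b: float, point: tuple[float, float]) -> int:
    return 1 if sigmoid(w[0] * point[0] + w[1] * point[1] + b) >= 0.5 else 0


def accuracy(w: list[float], b: float, data) -> float:
    return sum(predict(w, b, p) == y for p, y in data) / len(data)


def train(data, target=0.9, lr=0.5, max_epochs=100):
    """Repeat passes over the data, adjusting weights by the error against
    the ground truth, until training accuracy reaches the target."""
    w, b = [0.0, 0.0], 0.0
    acc = accuracy(w, b, data)
    for _ in range(max_epochs):
        for (x1, x2), y in data:
            err = y - sigmoid(w[0] * x1 + w[1] * x2 + b)  # error-based update
            w[0] += lr * err * x1
            w[1] += lr * err * x2
            b += lr * err
        acc = accuracy(w, b, data)
        if acc >= target:
            break
    return w, b, acc


def diagnose(train_acc: float, test_acc: float, target=0.9, max_gap=0.1) -> str:
    if train_acc < target:
        return "underfitting"   # poor even on the training data
    if train_acc - test_acc > max_gap:
        return "overfitting"    # good on training data, poor on unseen data
    return "acceptable"


rng = random.Random(42)
train_set = make_dataset(200, rng)
held_out = make_dataset(200, rng)  # previously unseen inputs
w, b, train_acc = train(train_set)
test_acc = accuracy(w, b, held_out)
print(f"train={train_acc:.2f} held-out={test_acc:.2f} -> {diagnose(train_acc, test_acc)}")
```

Because the synthetic data are simple and drawn from a single distribution, this toy model is expected to generalise well; a real deployment environment varies far more than any training set.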

The main variations of ANN/DLNN architectures are by now well documented in the literature. Achieving correct image recognition within acceptable accuracy therefore depends chiefly on having a rich dataset. The ability to acquire huge volumes of data constrains how well an ANN/DLNN can be trained, and gaps in that data are also the main source of bias in the neural network’s capabilities: the sheer variation in the deployment environment is difficult to capture during the training process.

III. Legal uncertainties

Although many drone operators use drones that can be bought off the shelf, this does not mean that there are no rules with which their operators must comply. Just as anyone using a bicycle has to comply with traffic rules and is consequently responsible for any damage caused to third parties, users of drones must comply with applicable rules, such as the EU’s Basic Regulation (or retained legislation in the UK) and domestic criminal and tort law in the country where the drone is being operated. As already noted, the use of drones, and especially autonomous drones, raises a bewildering number of legal issues that are regulated in an equally bewildering set of rules derived from national, regional and international law. For the operation of drones, legal uncertainty is not created by the absence of rules; rather, it is created by the lack of clarity surrounding the applicability of the existing rules to this new technology, as well as by divergence amongst the different domestic legal regimes.

Another factor that raises issues is the capability of drones to take seemingly autonomous decisions, and the treatment of this capability by the law. Law is by definition anthropocentric. Humans are the primary subject and object of norms that are created, interpreted and enforced by other humans. As machines become ever more intelligent and autonomous, lawmakers and courts will face increasingly complex dilemmas when regulating the autonomous conduct of these machines.27 In 2019, a report by the Expert Group on Liability and New Technologies, established by the EU Commission, noted the following: “The more complex these ecosystems become with emerging digital technologies, the more increasingly difficult it becomes to apply liability frameworks.”28

The complex legal issues raised by AI are further exacerbated by the fact that there is no universal definition of AI or autonomy. In fact, although the Basic Regulation seeks to regulate any aircraft operating or designed to operate “autonomously”, it does not define the term.

Moreover, it is contentious whether AI may ever truly replicate human levels of intelligence. If AI systems ever acquire this ability, this would raise questions concerning their legal status and whether they could have legal personality (ie being recognised in law as independent actors with their own rights and responsibilities). However, there is nothing new about non-human actors having rights and obligations. As noted by Lord Sumption: “The distinct legal personality of companies has been a fundamental feature of English commercial law for a century and a half.”29 Corporations even have limited human rights.30 For now, the issue of legal personality for AI systems remains controversial, but the aforementioned Expert Group noted that there was “currently no need to give a legal personality to emerging digital technologies”,31 as “[h]arm caused by even fully autonomous technologies is generally reducible to risks attributable to natural persons or existing categories of legal persons, and where this is not the case, new laws directed at individuals are a better response than creating a new category of legal person”.32

Even though autonomous systems lack legal personality, their use still presents certain legal problems: the more autonomous a system is in its decisions and actions, the more difficult it is likely to become to apply anthropocentric law to a situation where that system causes damage.33

EU rules have created a certain level of harmonisation concerning the operation of drones in the airspace of EU Member States. Thus, Commission Implementing Regulation 2019/947 (on the rules and procedures for the operation of unmanned aircraft) and Commission Delegated Regulation 2019/945 (on unmanned aircraft systems and on third-country operators of unmanned aircraft systems) provide harmonised provisions for drone operations. Commission Implementing Regulation 2019/947 divides the operation of drones in the EU into three categories: “open”, “specific” and “certified”, depending inter alia on the risk level involved in operating the drone in question.34 Autonomous drones as used for the RAPID project fall under the “specific” category, as these drones are operated without a pilot.35 The operation of drones in the specific category requires the operator of a drone to obtain a special authorisation prior to operation.36 It is the EU Member States themselves (or in the case of the UK the Civil Aviation Authority (CAA)) that are responsible for enforcing the regulation and for issuing these authorisations for operations in the so-called “specific” category.

Regulation 2019/947 further requires that:

… [drone] operators and remote pilots should ensure that they are adequately informed about applicable Union and national rules relating to the intended operations, in particular with regard to safety, privacy, data protection, liability, insurance, security and environmental protection.37

Although this obliges operators and remote pilots to stay informed about the relevant legal regimes, the Regulation provides no further guidance on which extant rules apply to drones, or how, in particular when it comes to civil liability arising from accidents caused by autonomous drones. As noted by Masutti:

What is missing is a liability regime, insurance and privacy protection [concerning drones]. The absence of a clear legal framework has already pushed European institutions and international organisations to address several guidelines in order to proceed in setting up a new framework regulation on this topic. The legal instruments for reaching this goal unfortunately remain unclear.38

These are but some of the uncertainties raised by this new technology. Some of the existing uncertainties have already been addressed in the literature, such as the application of extant aviation rules to drones.39 Other issues have received noticeably less attention, such as civil liability, the associated connection to privacy and data protection40 and issues of administrative and criminal law. These are issues that are predominantly, though not exclusively, dealt with in domestic law. The following section focuses on a working scenario of an autonomous drone falling from the sky and the legal consequences in the UK (focusing only on English and Welsh private law) and Germany. These two jurisdictions are chosen not only because they are part of the RAPID project, but also because each is an exemplar of one of the two major legal traditions: common law and civil law.

This article was republished from Cambridge University Press under a Creative Commons license.

University of Dundee, School of Law, Dundee, UK: Jacques Hartmann and Eva Jueptner • University of the West of Scotland, Glasgow, UK: Santiago Matalonga, James Riordan, and Samuel White